The Equilibrium Trap: Why Your Organization Rejects AI


Part I — Why AI Initiatives Stall

Your organization is doing exactly what stable systems do: defending itself against disruption.

A pilot succeeds. Leadership gets excited and authorizes expansion. IT budgets inflate while business units take cuts to fund the initiative. Six months later, the project sits in a holding pattern labeled “learning”—useful mainly as a reference point for the next attempt.

This pattern repeats everywhere. The explanations are familiar: adoption challenges, data quality issues, unclear ROI. These are symptoms. The underlying cause is structural: organizations that successfully execute mergers, product launches, and regulatory submissions consistently struggle to turn AI pilots into lasting operational change because AI requires a different kind of organizational capacity.

Your Organization Is an Equilibrium

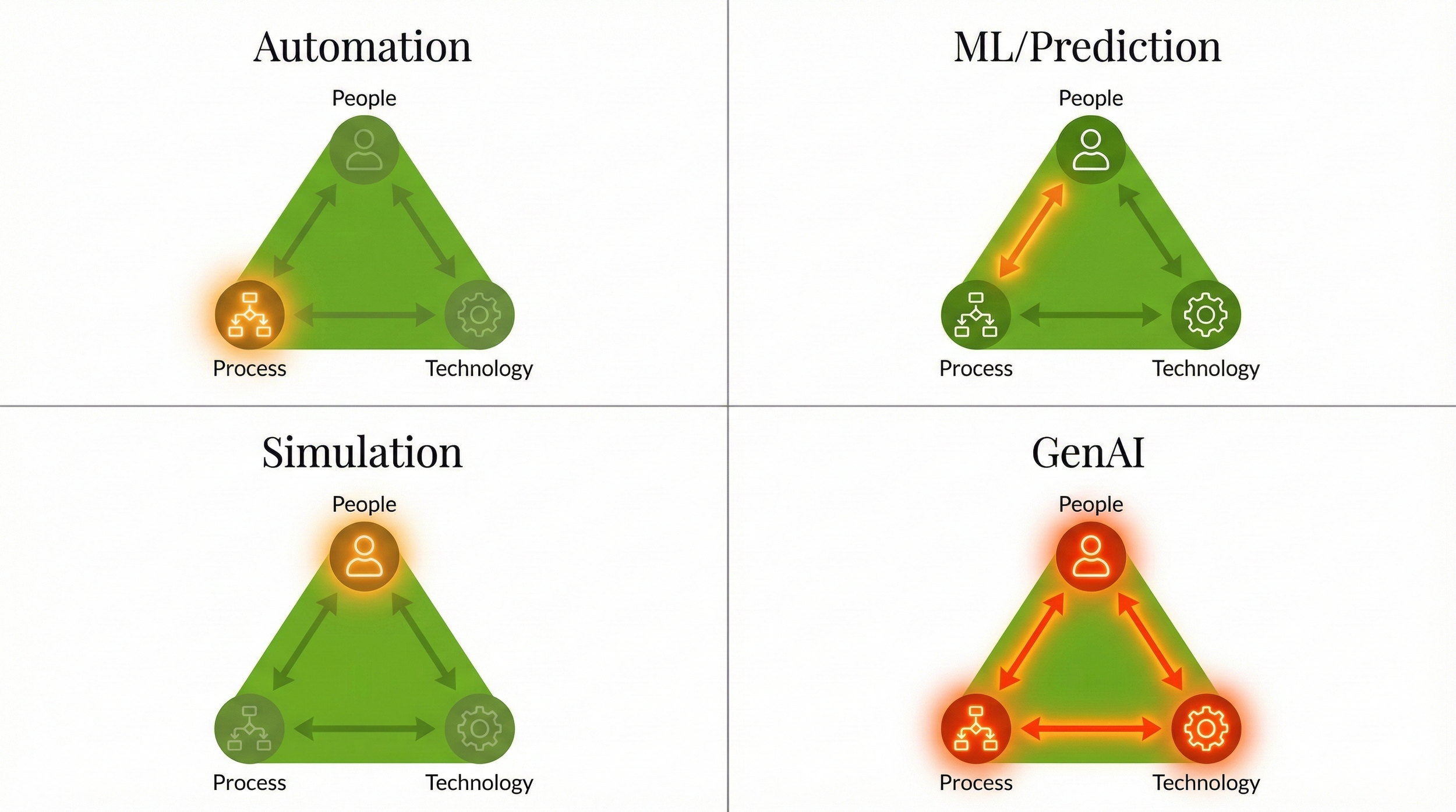

Every organization is a negotiated settlement between People, Process, and Technology. Over years, these three elements optimize against each other until they reach a stable configuration—an equilibrium where everything more or less works.

People optimize for manageable workloads and acceptable personal risk. Processes optimize for predictability and minimal exceptions. Technology optimizes for low maintenance and limited integration burden. Each accommodation reinforces the others. Game theorists call this a Nash equilibrium: no single player can improve their position by changing strategy alone.

Governance formalizes this equilibrium. Decision rights, approval thresholds, escalation paths—these define what the system will defend.

Here’s what this looks like in practice: A pharma company implements a new system to accelerate regulatory submissions. The technology works. But approval thresholds remain unchanged. Exception handling stays undefined. So people keep doing what they did before—just with an extra system to click through. Cycle times hold steady.

Why Generative AI Requires More

Previous automation waves fit into existing structures. RPA automated discrete tasks with clear boundaries. Predictive models offered recommendations that humans could accept or ignore. Simulation tools let people explore scenarios without committing.

Generative AI is different: it participates in the workflow. It produces outputs that look like human judgment (drafts, analyses, recommendations), and those outputs require accountability structures that most organizations have yet to build.

Ask who owns a GenAI output. Is it the person who wrote the prompt? The person who reviewed it? The vendor who built the model? When the output is wrong, who’s accountable? Organizations that answer these questions explicitly capture value—and the answers unlock deployment speed, user adoption, and scalable governance.

“The evidence: A unified Regulatory Information Management System (RIMS) implementation can reduce time spent searching for information by 40% and cut team involvement in change processes by more than 50%.¹ The technology delivers—when organizations redesign workflows to receive it. The 48% of AI projects that reach production² share a common pattern: they adapted governance and workflows alongside the technology.”

“The scale of the opportunity: 70% of GenAI projects that move past proof-of-concept do so because they defined governance upfront—clear ownership, explicit error thresholds, and bounded scope.² The technology works when the organization has a place for it.”

The Real Requirement

AI initiatives stall because they’re introduced into systems with no structural place for them.

What works is redesigning how work, authority, and accountability interact—so the organization has a place for AI to operate.

This is most visible in regulated environments. Pharmacovigilance teams have local qualified persons who act as human checkpoints—interpreting context, validating outputs, maintaining trust across jurisdictions. These roles work because decision rights are explicit, escalation paths are defined, and human judgment is embedded exactly where risk concentrates.

This is an argument for building the organizational capacity to use AI, not just for buying AI. The vendor sells capability. Organizational structure determines ROI.

“The production gap: Only 48% of AI projects make it into production, and those that do take an average of 8 months to get there from prototype.² Organizations that invest in readiness compress this timeline and improve success rates significantly.”

In practice: A top-20 pharmaceutical company launched generative AI initiatives. Three groups moved simultaneously. One group began building a custom solution, another started acquiring a commercial tool, and IT launched an enterprise pilot with a different vendor. Each group had a legitimate rationale.

When the board demanded consolidation, ownership remained unclear. The company that moved fastest in this space—a competitor that started six months later—had a single owner and defined governance, and went live in nine months.

Part II — The Fitness Diagnostic

Part I explains why AI initiatives stall. Part II shows where to look.

The instinct is to start with AI capabilities—what the models can do, what competitors are deploying. Start with the work instead. Most AI gets applied to work that’s poorly understood or inconsistently governed. Diagnosis before deployment changes outcomes.

Make the Work Visible

Most organizations cannot accurately describe how their critical work actually gets done.

They can list process steps and name the systems involved. They can point to theoretical owners on an org chart. Describing how work actually moves—where it stalls, where it loops back, where handoffs create delays—requires mapping work as it happens, not as it’s documented.

This matters because AI amplifies whatever it’s applied to. Applied to a clear, well-governed workflow, it accelerates decisions and removes friction. Applied to a confused one, it scales the confusion. The diagnostic ensures AI gets applied where it can succeed.

The diagnostic starts by mapping actual work. Identify roles, decision points, and handoffs. Estimate time, effort, and rework rates. Directional accuracy is enough to change decisions.

The Five Frictions

Friction is coordination cost that doesn’t reduce risk, improve quality, or change outcomes. Five types show up repeatedly:

| Friction | Pattern | Diagnostic Test |
|---|---|---|
| Coordination Theater | Meetings to prepare for meetings. Status updates no one acts on. | What percentage of time is spent talking about work versus doing work? |
| Data Scavenger Hunts | Information exists somewhere; finding it requires knowing who to ask. | Can a competent person get the information they need without sending an email? |
| Unclear Authority | Nobody knows who can say yes, so everyone escalates. | When did this workflow last produce a clear "no" that changed direction? |
| Redundant Validation | Review layers that exist because someone once wanted to reduce personal risk. | What would actually break if you removed one layer? |
| Integration Friction | Work stops so data can change format. Export, transform, re-enter. | How confident are you in the numbers you present to investors or regulators? |

These frictions cluster around specific handoffs, roles, and decision points. That’s where intervention has the highest leverage.

“The coordination tax: Knowledge workers spend 57% of their time communicating, leaving 43% for focused work.⁴ More than one-third of business meetings are considered unproductive.⁵ For U.S. businesses, unproductive meetings cost an estimated $259 billion annually.⁵”

“The data debt: Poor data quality costs U.S. businesses an estimated $3.1 trillion annually.⁷ Data practitioners spend roughly 80% of their time finding, cleaning, and organizing data.⁹”

Sort What You Find

Protective friction guards against real risk—regulatory, safety, quality. If you can name the specific requirement it satisfies, keep it and benchmark it.

Expensive friction serves a real purpose at too high a cost. The mechanism works; the economics require redesign.

Vestigial friction solved a problem that no longer exists. It persists because removing it feels riskier than keeping it.

When nobody can remember the last time a step actually mattered, you know which category it belongs to.
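
The sorting rule can be written down as a small triage function. This makes the criteria explicit when a team reviews a long friction inventory. It is a minimal sketch under stated assumptions, not part of the diagnostic itself. The `FrictionPoint` fields and the `classify` helper are hypothetical. So are the idea of comparing annual cost against an acceptable cost and the 12-month "last mattered" window.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FrictionPoint:
    """One step found while mapping a workflow (hypothetical fields, for illustration)."""
    name: str
    named_requirement: Optional[str]         # regulatory, safety, or quality rule it satisfies
    months_since_it_mattered: Optional[int]  # None means nobody can remember
    annual_cost: float                       # estimated loaded cost of the step
    acceptable_cost: float                   # what its purpose is worth paying for


def classify(step: FrictionPoint, stale_after_months: int = 12) -> str:
    """Sort a friction point into 'protective', 'expensive', or 'vestigial'."""
    recently_mattered = (
        step.months_since_it_mattered is not None
        and step.months_since_it_mattered <= stale_after_months
    )
    # Assumption: a step serves a real purpose if it names a requirement
    # or has recently changed an outcome.
    if step.named_requirement is None and not recently_mattered:
        return "vestigial"   # solved a problem that no longer exists
    if step.annual_cost > step.acceptable_cost:
        return "expensive"   # real purpose, too high a cost: redesign the economics
    return "protective"      # real purpose at an acceptable cost: keep and benchmark
```

The cost comparison is deliberately crude. Its job is to force a team to state what a step is worth before arguing about whether to keep it.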

In practice: A biotech executive described his most persistent frustration. Getting aligned with his direct reports required at least three meetings: one to share context, one to discuss, and one to confirm decisions. He wanted AI to summarize and automate.

When we mapped the actual workflow, the problem became obvious. There was no structured handoff. Documents went out without a clear ask. Comments came back without decision criteria. The "meetings" existed because the asynchronous process didn't work, not because the people were inefficient.

The fix required no technology: a one-page template specifying what decision was needed, what context was attached, and what "good enough" looked like. Review time dropped from three weeks to four days. Only then did AI assistance make sense, summarizing comments into a decision-ready format for the final reviewer.

The diagnostic paid for itself before any technology was deployed.

Red Flag Checklist: Kill Zombie Projects Today

Before the full diagnostic, check your current AI initiatives against these indicators:

| Red Flag | What It Means | Action |
|---|---|---|
| No named owner outside IT | No one accountable for business outcomes | Assign owner or pause |
| "Pilot" status beyond 6 months | Organization hasn't absorbed it | Set 30-day go/no-go |
| Multiple parallel initiatives, same use case | Governance gap, not technology gap | Consolidate immediately |
| Success metrics are technical (accuracy, latency) | No connection to business value | Redefine or kill |
| Users have workarounds | Tool doesn't fit workflow | Redesign or kill |
| No one can explain the governance model | Liability without value | Pause and define |
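
For a portfolio with many initiatives, the checklist can be applied mechanically to a short status record for each one. The sketch below assumes a hypothetical `Initiative` record with one field per red flag. It returns the triggered flags alongside the table's recommended actions. The field names, and treating "more than one initiative for the same use case" as the parallel-initiative trigger, are illustrative choices.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Initiative:
    """Snapshot of one AI initiative (hypothetical fields, for illustration)."""
    name: str
    business_owner: Optional[str]     # named owner outside IT, if any
    months_in_pilot: int
    initiatives_same_use_case: int    # count, including this one
    has_business_metric: bool         # tied to business value, not only accuracy or latency
    users_have_workarounds: bool
    governance_explainable: bool      # someone can explain the governance model


def red_flags(i: Initiative) -> List[Tuple[str, str]]:
    """Return (red flag, recommended action) pairs from the checklist that apply."""
    checks = [
        (not i.business_owner, "No named owner outside IT", "Assign owner or pause"),
        (i.months_in_pilot > 6, '"Pilot" status beyond 6 months', "Set 30-day go/no-go"),
        (i.initiatives_same_use_case > 1, "Multiple parallel initiatives, same use case",
         "Consolidate immediately"),
        (not i.has_business_metric, "Success metrics are technical", "Redefine or kill"),
        (i.users_have_workarounds, "Users have workarounds", "Redesign or kill"),
        (not i.governance_explainable, "No one can explain the governance model",
         "Pause and define"),
    ]
    return [(flag, action) for triggered, flag, action in checks if triggered]
```

An empty result does not mean an initiative is healthy. It only means none of these six warning signs is present.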

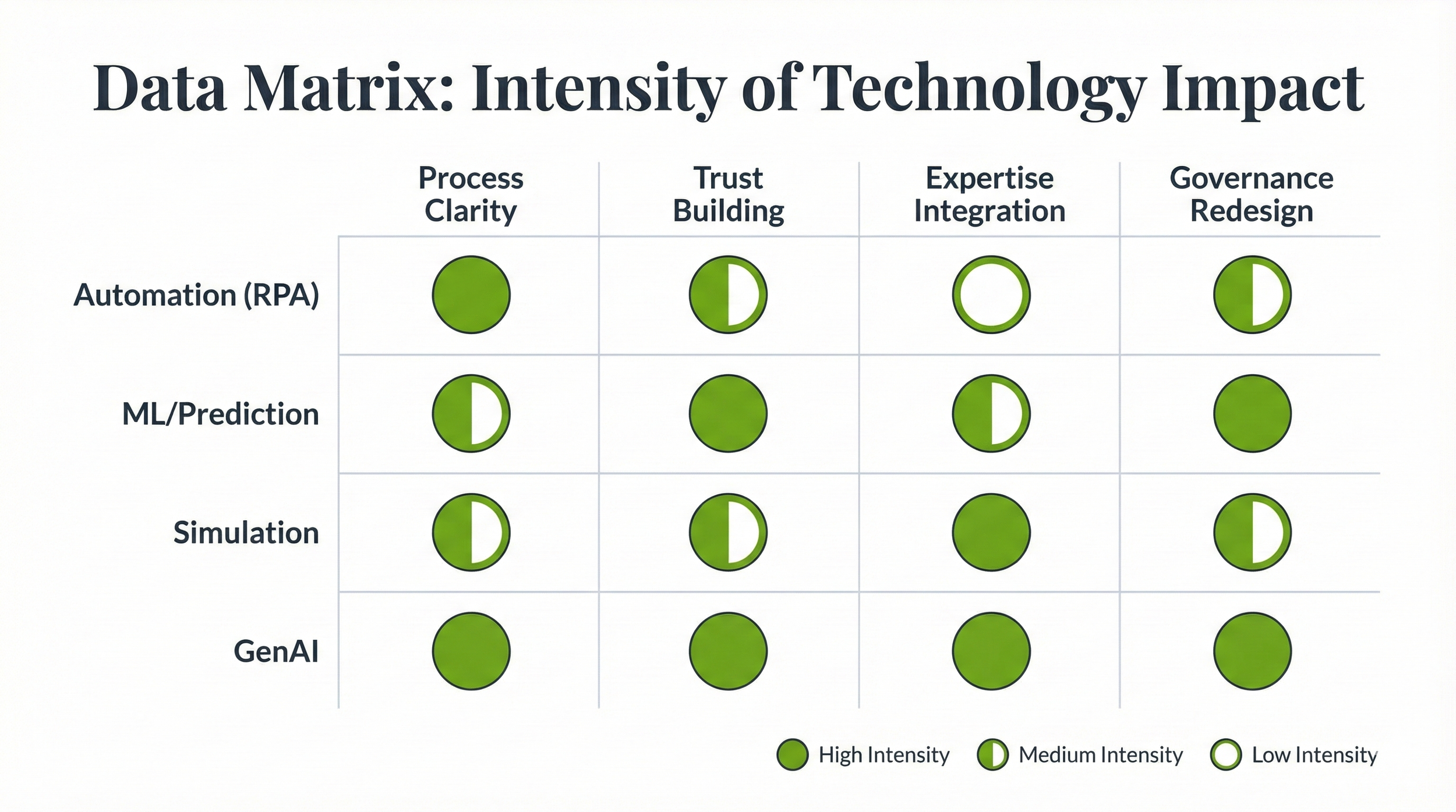

| AI Type | Best For | Organizational Constraint | Where Stress Concentrates | Investment Ratio |

|---|---|---|---|---|

| Automation (RPA) | Stable, rule-based work with documented exceptions | Process clarity | Process: workarounds emerge where exceptions aren't defined | $1 tech : $2 process documentation |

| Machine Learning | Pattern recognition in structured data (forecasting, prioritization) | Trust | People-Process boundary: override rates determine value capture | $1 tech : $1 trust-building cadence |

| Simulation | Complex system behavior with many variables | Expertise integration | People: adoption requires expert involvement in model design | $1 tech : $1 expert embedding |

| Generative AI | Judgment and synthesis requiring human-like outputs | Governance | All three simultaneously: requires explicit ownership, validation, escalation | $1 tech : $4 governance design |

Part III — Matching AI to Work (and Understanding the Stress)

You now have a map of work and friction. This constrains your options—which is good. Most AI investments fail because organizations apply capabilities to work that can’t receive them.

Different AI applies to different work, and each type stresses a different part of the organization. Understanding both determines success.

The AI Matching Framework

Automation: Process Clarity Required

When workflows are stable and well documented, with exceptions explicitly handled, automation delivers. RPA removes manual effort, cuts cycle time, and improves consistency.

The constraint is process clarity. The stress shows up as workarounds—side processes and local fixes that keep things moving while undermining intended gains.

The economics: 30–50% of RPA projects initially fail.¹⁰ Bot licensing ($5,000–$15,000) represents only 25–30% of total cost of ownership—the remaining 70–75% goes to infrastructure, consulting, and maintenance.¹¹ Organizations that invest in process documentation before automation see 3x better outcomes.
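
If licensing is only 25–30% of total cost of ownership, the license price understates the real cost by a factor of roughly 3.3 to 4. The arithmetic is a one-line division, sketched below. `implied_tco` is a hypothetical helper. Pairing the cheapest license with the highest share, and the most expensive license with the lowest share, to get the widest range is an assumption.

```python
def implied_tco(license_cost: float, license_share: float) -> float:
    """Total cost of ownership implied when licensing is `license_share` of the total."""
    if not 0 < license_share <= 1:
        raise ValueError("license_share must be in (0, 1]")
    return license_cost / license_share


# Widest range implied by the figures in the text (hypothetical pairing of the bounds).
low = implied_tco(5_000, 0.30)
high = implied_tco(15_000, 0.25)
```

With the figures above, a license in the $5,000–$15,000 range implies a total cost of ownership of about $16,700 to $60,000.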

Machine Learning: Trust Required

When work involves recognizing patterns in structured data, ML can significantly improve decision quality.

The constraint is trust. The stress shows up as overrides—people acknowledge the prediction and do what they were going to do anyway. This is rational behavior when accountability is personal.

The economics: When humans intervene in ML forecasts, they often degrade accuracy. One study showed accuracy dropping from 86% with ML alone to 65% after human override—a 21 percentage point loss.¹³ In clinical settings, override rates for decision support alerts reach 90–93%.¹⁴

The fix: Keep a scoreboard of when the model was correct. Build a cadence around reviewing predictions versus outcomes. Trust builds through demonstrated accuracy, not assertions.
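
A scoreboard of this kind needs only three facts per decision: what the model predicted, what the person decided, and what actually happened. The sketch below assumes categorical predictions (for example, "expedite" versus "standard") and a hypothetical `Decision` record. It reports four numbers:
- model accuracy
- accuracy of the final decisions
- the override rate
- how often overrides turned out to be right

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Decision:
    """One prediction-versus-outcome record (hypothetical fields, for illustration)."""
    model_prediction: str
    human_decision: str
    actual_outcome: str


def scoreboard(decisions: List[Decision]) -> Dict[str, Optional[float]]:
    """Summarize how the model and the people overriding it actually performed."""
    if not decisions:
        raise ValueError("no decisions to score")
    n = len(decisions)
    overrides = [d for d in decisions if d.human_decision != d.model_prediction]
    model_correct = sum(d.model_prediction == d.actual_outcome for d in decisions)
    final_correct = sum(d.human_decision == d.actual_outcome for d in decisions)
    # An override "wins" when the person was right; with categorical outcomes,
    # that means the model was wrong on that decision.
    override_wins = sum(d.human_decision == d.actual_outcome for d in overrides)
    return {
        "model_accuracy": model_correct / n,
        "final_accuracy": final_correct / n,
        "override_rate": len(overrides) / n,
        "override_win_rate": override_wins / len(overrides) if overrides else None,
    }
```

Suppose overrides win less often than the model would have, and this persists across review cycles. Then the scoreboard supplies the demonstrated accuracy that trust depends on.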

Simulation: Expertise Integration Required

When outcomes depend on interactions between many variables, simulation helps explore scenarios before committing.

The constraint is expertise integration. If domain experts aren’t involved in building the model, they won’t trust its outputs. Simulation becomes an academic exercise that informs discussion without shaping decisions.

Generative AI: Governance Required

When work requires pulling together incomplete information or producing novel outputs, GenAI applies.

The constraint is governance. GenAI produces outputs that look like human judgment, requiring structures that most organizations haven’t built: Who owns the prompt? Who validates the output? What error rate is acceptable? Where does escalation happen?

The stress concentrates everywhere simultaneously—People, Process, and Technology all take load. This is why GenAI requires the highest governance investment.

The economics: 70% of organizations attempting to scale GenAI report difficulties developing necessary governance.¹⁵ Gartner projects that over 40% of agentic AI projects will be canceled by the end of 2027, citing escalating costs, unclear business value, and inadequate risk controls. All three grow when organizations automate processes without redesigning governance.¹⁶

In practice: Two large pharmaceutical companies attempted similar GenAI deployments in regulatory writing.

The company that succeeded assigned a senior regulatory writer as product owner—someone who understood both the submission process and AI limitations. They defined explicit governance: who owns the prompt library, what error rate triggers escalation, how outputs get validated. They started with one document type, measured time savings against quality, and expanded only after demonstrating value.

Within eighteen months, they had reduced first-draft cycle time by 40% across their CMC organization, with the governance model now replicating to clinical regulatory.

The AI Product Owner Role

The reviewer of your current AI initiatives, the person who kills zombie projects, the owner of governance design—this is a specific role that most org charts lack.

Profile:
- Domain expertise in the workflow being automated (regulatory, clinical, commercial)
- Sufficient technical literacy to evaluate AI capabilities and limitations
- Authority to make go/no-go decisions on scope and deployment
- Accountability for business outcomes, measured in dollars

This is not:
- An IT project manager
- A data scientist
- A “transformation lead” with dotted-line authority
- A committee

If you can’t name this person for each AI initiative, you have a governance gap. Fill it before spending more on technology.

When to Sequence Organizational Redesign First

Sometimes the diagnostic reveals that friction is structural. The workflow needs redesign. Authority needs clarification. Incentives need alignment.

In these cases, organizational redesign comes first, with AI deployment sequenced after. The diagnostic identifies these situations so you invest in the right sequence.

“The maintenance trap: While 53% of enterprises have started RPA journeys, far fewer successfully scale beyond 10–50 bots due to exponential growth in maintenance complexity.¹⁷ Organizations frequently automate broken processes instead of redesigning operations—creating “sludge automation” that makes bad processes run faster.¹⁶”

“Algorithm aversion is real: Research shows humans override superior AI advice 45–50% of the time in diagnostic tasks.¹⁸ In supply chain planning, the “Forecast Value Added” of manual adjustments is often negative—meaning highly paid planners actively make forecasts worse than raw statistical output.¹⁹”
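
Forecast Value Added is usually computed by comparing the error of one forecasting step with the error of the step before it. Here that means the raw statistical forecast against the manually adjusted one. The sketch below uses mean absolute percentage error (MAPE) as the error metric. MAPE is a common choice, but it is an assumption here, and it requires nonzero actuals. The function names are illustrative.

```python
from typing import Sequence


def mape(actuals: Sequence[float], forecasts: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent. Assumes no actual value is zero."""
    if len(actuals) != len(forecasts) or not actuals:
        raise ValueError("need equal-length, non-empty series")
    return 100 * sum(abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)) / len(actuals)


def forecast_value_added(actuals: Sequence[float],
                         statistical: Sequence[float],
                         adjusted: Sequence[float]) -> float:
    """FVA of the manual-adjustment step, in MAPE points.

    Positive: the adjustments reduced error. Negative: they made the forecast worse.
    """
    return mape(actuals, statistical) - mape(actuals, adjusted)
```

A consistently negative value for a planning step is the signal described above: the adjustment costs effort and removes accuracy.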

Part IV — Designing the Intervention

By now you understand the system. You’ve mapped the work, classified the friction, identified stress points. What remains is intervention.

This is where most initiatives fail for the second time. The diagnosis is right. The response is incomplete. Training gets added. Processes get updated. Technology gets deployed. Each change makes sense in isolation. The system absorbs all of them and returns to equilibrium.

Intervention works when you move the system in a coordinated way.

Move People, Process, and Technology Together

These three move together or they snap back. Change one without adjusting the others and you create strain.

Durable change requires synchronized movement across all three, governed by updated rules that define what’s now legitimate.

This does not mean transforming the whole organization at once. It means ensuring coherence within whatever scope you choose. If you change one workflow, change the people-process-technology configuration for that workflow together.

“Why 70% matters: BCG’s analysis reveals that approximately 70% of challenges in AI implementation stem from people and process issues. Only 10% are attributed to AI algorithms, with 20% linked to technology infrastructure.²⁰ Investing in better algorithms (the 10%) yields less than investing in absorption capacity (the 70%).”

“The change management reality: Up to 70% of AI-related change initiatives fail due to employee pushback or inadequate management support.²¹ This is not a failure of code; it’s a failure of change management. Without a clear “AI Product Owner” in the business line, no one is there to defend or refine the model when it encounters its first edge case. The organization takes the easiest path: a return to the manual status quo.”

Three Levers That Work

Visibility: Make performance observable at the level where work happens. When people see cycle times, rework rates, and decision latency, accountability emerges. When AI performance is visible to the people it’s supposed to help, calibration happens naturally. This requires someone who understands both the business function and AI well enough to interpret what they’re seeing—the AI Product Owner.

Friction redesign: Make desired behavior easy. If the AI-assisted path requires more clicks than the manual path, people will use the manual path. Redesign the friction landscape so the path of least resistance is the path you want.

Incentive alignment: Align what gets rewarded with what the system needs. If people are measured on individual output while the system needs collaborative AI use, you’ll get individual optimization. Make speed, quality of supervision, and effective AI use explicit performance criteria.

GenAI Governance Template

For Generative AI specifically, answer these questions before deployment:

| Governance Element | Question | Owner |
|---|---|---|
| Prompt Ownership | Who maintains and updates the prompt library? | Named individual |
| Output Validation | What gets reviewed before use? By whom? | Defined by document type |
| Error Threshold | What error rate triggers escalation vs. iteration? | Quantified (e.g., <5% hallucination rate) |
| Escalation Path | When something fails, who decides next steps? | Named individual with authority |
| Feedback Loop | How do failures reach someone who can change the design? | Defined cadence and channel |
| Scope Boundaries | What is explicitly out of scope for AI assistance? | Documented and communicated |
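
The template can work as a deployment gate. Every row must have an answer before launch, and the error threshold then decides whether a failure is iterated on or escalated. Below is a minimal sketch built on a hypothetical `GenAIGovernance` record whose fields mirror the table rows. It assumes the threshold is expressed as a fraction (0.05 for the table's 5% example) and that reaching the threshold triggers escalation.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


@dataclass
class GenAIGovernance:
    """Answers to the governance template (hypothetical fields mirroring the table)."""
    prompt_owner: Optional[str] = None       # who maintains the prompt library
    output_validation: Optional[str] = None  # what gets reviewed, and by whom
    error_threshold: Optional[float] = None  # e.g., 0.05 for a 5% hallucination rate
    escalation_owner: Optional[str] = None   # who decides next steps on failure
    feedback_cadence: Optional[str] = None   # how failures reach the design owner
    out_of_scope: List[str] = field(default_factory=list)

    def missing_elements(self) -> List[str]:
        """Names of template rows that are still unanswered."""
        return [f.name for f in fields(self) if getattr(self, f.name) in (None, "", [])]

    def ready_to_deploy(self) -> bool:
        return not self.missing_elements()

    def route(self, observed_error_rate: float) -> str:
        """Iterate below the threshold; escalate at or above it."""
        if self.error_threshold is None:
            raise ValueError("error threshold not defined")
        return "escalate" if observed_error_rate >= self.error_threshold else "iterate"
```

Making the gate a function rather than a slide means a missing answer blocks deployment instead of being noted and deferred.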

Design this governance at the same time as build/buy/partner decisions. Waiting until after capability purchase guarantees a governance gap.

Part V — Implementation

Implementation reveals whether everything you’ve designed actually works.

The failure pattern is predictable: early momentum, expanding scope, weakening governance, system reassertion. Six months later, you’re back where you started with less budget and more skepticism.

Test in Real Conditions

Live workflow testing with real constraints and accountable people produces the most useful learning. Test with the people who will actually own the results.

Short cycles matter—especially for GenAI, where the goal is learning velocity. Each cycle should answer one question: what did we learn that changes what we do next?

Build the Management System Before You Need It

When consultants leave, what remains is the management system. Building it deliberately ensures continuity and sustained performance.

Explicit ownership. Every prompt, model, workflow, and decision needs a named accountable person.

Observable performance. Accuracy, adoption, rework, trust—track these where decisions actually happen.

Compatible cadence. Build AI oversight into meetings that already exist. New rhythms that compete with existing operating rhythms lose.

Functional feedback loops. When something fails, that information must reach someone who can change the design.

“Ownership drives adoption: High-performing AI organizations are characterized by senior leaders who demonstrate strong ownership and commitment.³ AI models drift as data changes; without a dedicated owner responsible for ongoing performance, the model decays until someone turns it off.²¹”

Scale Through Learning

Scaling introduces new failure modes. What worked in one workflow can break when applied more broadly.

The safest path: expand to adjacent workflows where assumptions, roles, and governance patterns are similar enough that learning transfers.

Each implementation should make the next one cheaper and faster. When implementation #5 costs less than implementation #1, you’re building organizational capability.

The Investment Heuristic

For planning purposes, budget AI initiatives using this ratio:

| AI Type | Technology | Governance & Change | Total Multiple |
|---|---|---|---|
| RPA | $1 | $2 | 3x technology cost |
| ML | $1 | $1 | 2x technology cost |
| Simulation | $1 | $1 | 2x technology cost |
| GenAI | $1 | $4 | 5x technology cost |

If your current GenAI budget allocates $2M for technology and $500K for “change management,” you’re underfunded by $7.5M, or your scope should shrink by 75%.
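
The ratio table reduces to simple arithmetic. Required governance spend is the technology budget times the ratio. If the total budget is fixed, technology scope must shrink until the ratio holds. The sketch below encodes the table's ratios. The function name and the choice to hold the total budget constant when computing the scope reduction are assumptions for illustration.

```python
from typing import Dict, Tuple

# Governance-and-change dollars per technology dollar, from the heuristic table.
GOVERNANCE_RATIO: Dict[str, float] = {"RPA": 2.0, "ML": 1.0, "Simulation": 1.0, "GenAI": 4.0}


def funding_check(ai_type: str, tech_budget: float, change_budget: float) -> Tuple[float, float]:
    """Return (funding gap, fraction by which technology scope must shrink).

    Both values are 0 when the plan already meets the ratio.
    """
    ratio = GOVERNANCE_RATIO[ai_type]
    required_total = tech_budget * (1 + ratio)
    current_total = tech_budget + change_budget
    gap = max(0.0, required_total - current_total)
    # Hold the current total fixed and rescale technology scope so the ratio holds.
    affordable_tech = current_total / (1 + ratio)
    shrink = max(0.0, 1 - affordable_tech / tech_budget)
    return gap, shrink


gap, shrink = funding_check("GenAI", 2_000_000, 500_000)
```

Applied to the example above, the function returns both the governance gap and the scope reduction needed to fit the current total. The heuristic frames these as two ways of closing the same gap.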

“Learning curves exist: Multi-site rollouts using standardized approaches show measurable savings—one case demonstrated a 22% decrease in deployment expenses by final sites.²² Cloud migration and AI modernization can take 1–3 years to show positive ROI.²³ Organizations that persist through the J-curve capture compounding value as each implementation makes the next one faster and cheaper.”

The Bottom Line

Organizations that redesign themselves to use AI effectively create advantage.

The sequence matters:

1. Diagnose the work: map how it actually moves
2. Identify friction: find the five types and classify each as protective, expensive, or vestigial
3. Match AI to work: apply the right type to the right constraint
4. Design the intervention: move People, Process, and Technology together
5. Implement with discipline: test in real conditions, build governance, scale through learning

This is organizational engineering. Technology is one input among several.

The equilibrium trap is real: organizations naturally defend against change. Breaking out requires coordinated movement—and the patience to build absorption capacity before deploying capability.

The companies that win are building organizations that can use AI—and that capability compounds with every deployment.


About Brinton Bio

Brinton Bio helps life sciences organizations and their investors turn ambition into operational results.

We work at the intersection of strategy, AI, and operations—where value creation happens and where most initiatives stall.

We fix stalled AI initiatives. We help investors determine if their portfolio companies are building 'Innovation Theater' or operational capacity. We measure the load-bearing capacity of the organization, redesign the governance, and turn 'Pilot' into 'Production' in 90 days.

Our approach: Decision clarity in 30 days. Proof of value in 60-90 days. Transferable assets that survive leadership changes and exits.

References

1. Kalypso, “Case Study: Unified RIM Implementation for Global Medical Device Manufacturer,” 2024.

2. Gartner, “AI Project Success Rates and GenAI Abandonment Projections,” 2024. Via Informatica analysis.

3. McKinsey, “The State of AI: Global Survey 2025.”

4. Microsoft, “Work Trend Index: Will AI Fix Work?” 2023.

5. London School of Economics, “Business Meeting Productivity Research,” October 2024.

6. Archie, “Meeting Statistics and Cost Analysis,” 2024.

7. IBM, “The Cost of Poor Data Quality in the United States,” research analysis.

8. Gartner, “Data Quality: Best Practices for Accurate Insights,” 2024.

9. Pragmatic Institute, “Overcoming the 80/20 Rule in Data Science,” industry analysis.

10. Ernst & Young, “RPA Implementation Failure Rates,” via ActiveBatch analysis.

11. Blueprint Systems, “How Much Does RPA Really Cost?” infographic analysis.

12. Digital Teammates, “Return on Investment from RPA vs. Hidden Costs,” 2024.

13. SAS Blogs, “How Machine Learning is Disrupting Demand Planning,” 2018.

14. JMIR Medical Informatics, “Appropriateness of Alerts and Physicians’ Responses with Medication-Related Clinical Decision Support,” 2022.

15. McKinsey, “Charting a Path to the Data- and AI-Driven Enterprise of 2030.”

16. Deloitte, “Tech Trends 2026” and Gartner Agentic AI projections.

17. Enterprisers Project, “Robotic Process Automation by the Numbers: 14 Interesting Stats,” 2019.

18. NIH/PMC, “The Extent of Algorithm Aversion in Decision-Making Situations,” 2023.

19. International Journal of Applied Forecasting, “Forecast Value Added: A Reality Check,” Foresight Issue 29.

20. BCG, “AI Adoption in 2024: 74% of Companies Struggle to Achieve and Scale Value,” October 2024.

21. CapTech Consulting, “Why AI Fails to Deliver ROI and the Keys to Success,” 2024; Improving, “Top 10 Reasons AI Projects Fail #8: No Ownership or Maintenance.”

22. Caliber Global, “Multi-Site Retail Rollout Cost Reduction Case Study,” 2024.

23. CloudZero, “Cloud Computing Statistics: A 2025 Market Snapshot.”

© 2026 Brinton Bio, LLC

STRATEGY • AI • OPERATIONS